Battlefield 4 Results

The GTX 970 memory issue led our readers to request testing of the cards paired up in SLI. Here you go.

At the end of my first Frame Rating evaluation of the GTX 970 after the discovery of the memory architecture issue, I proposed the idea that SLI testing would need to be done to come to a more concrete conclusion on the entire debate. It seems that our readers and the community at large agreed with us in this instance, repeatedly asking for those results in the comments of the story. After spending the better part of a full day running and re-running SLI results on pairs of GeForce GTX 970 and GTX 980 cards, we have the answers you're looking for.

Today's story is going to be short on details and long on data, so if you want the full back story on what is going on and why we are taking a specific look at the GTX 970 in this capacity, read here:

- Part 1: NVIDIA issues initial statement

- Part 2: Full GTX 970 memory architecture disclosed

- Part 3: Frame Rating: GTX 970 vs GTX 980

- Part 4: Frame Rating: GTX 970 SLI vs GTX 980 SLI (what you are reading now)

Okay, are we good now? Let's dive into the first set of results in Battlefield 4.

Battlefield 4 Results

Just as I did with the first GTX 970 performance testing article, I tested Battlefield 4 at 3840×2160 (4K) and utilized the game's ability to linearly scale resolution to help me increase GPU memory allocation. In the game settings you can change that scaling option by a percentage: I went from 110% to 150% in 10% increments, increasing the load on the GPU with each step.
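To give a sense of how quickly the load grows with each step, here is a minimal Python sketch of the render resolution at each scaling setting. It assumes the percentage applies to each axis, which the article does not state outright; the function name is illustrative only.

```python
# Sketch: effective render resolution for each Battlefield 4 resolution-scale step.
# Assumption (not stated in the article): the percentage applies to each axis,
# so the pixel count grows with the square of the scale factor.

BASE_WIDTH, BASE_HEIGHT = 3840, 2160        # 4K output resolution used in the testing
SCALE_PERCENTS = [110, 120, 130, 140, 150]  # scaling steps tested


def render_resolution(width, height, scale_percent):
    """Return (scaled_width, scaled_height, pixel_ratio) for a per-axis scale."""
    scaled_width = width * scale_percent // 100
    scaled_height = height * scale_percent // 100
    pixel_ratio = (scaled_width * scaled_height) / (width * height)
    return scaled_width, scaled_height, pixel_ratio


for percent in SCALE_PERCENTS:
    w, h, ratio = render_resolution(BASE_WIDTH, BASE_HEIGHT, percent)
    print(f"{percent}%: {w}x{h} ({ratio:.2f}x the pixels of native 4K)")
```

Under that assumption, the 150% setting renders more than twice as many pixels as native 4K, which is why memory allocation climbs with each step.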

Memory allocation between the two SLI configurations was similar, but not as perfectly aligned with each other as we saw with our single GPU testing.

In a couple of cases, at 120% and 130% scaling, the GTX 970 cards in SLI are actually each using more memory than the GTX 980 cards. That difference is only ~100MB but that delta was not present at all in the single GPU testing.

Our performance data is being broken up into two sets: the GTX 980s in SLI running at all five of our scaling settings and, separately, the GTX 970s in SLI running on the same five scaling settings. Plotting 10 sets of data on a single graph proved to be a bit too crowded, so we'll show the graphs successively to help you compare them more easily.

Unlike our first sets of results, the SLI numbers are ALMOST in a playable state, making them much more real-world than before. The first thing I noticed when compiling this data was that the GTX 980 cards in SLI actually had a couple more downward spikes in frame rate at 150% scaling than the GTX 970s did. I did confirm this was a regular pattern by re-running tests on both sets of hardware about six times, and the bright green line you see in the first graph above is actually one of the better results for the 980s.

It appears though that moving from 110% to 150% scaling results in the expected frame rate decreases in both configurations.

Average frame rates are where we expect them to be: the GTX 980 SLI is faster than the GTX 970 SLI by fairly regular margins.

| | GTX 980 | GTX 970 | % Difference |
|---|---|---|---|
| 1.10x Scaling | 47.3 FPS | 41.0 FPS | -15% |
| 1.20x Scaling | 41.1 FPS | 35.8 FPS | -15% |
| 1.30x Scaling | 35.4 FPS | 31.2 FPS | -13% |
| 1.40x Scaling | 31.0 FPS | 27.7 FPS | -12% |
| 1.50x Scaling | 27.7 FPS | 24.6 FPS | -13% |

The percentage differences here are actually more reliable than the single GPU results, which is a bit of a surprise to us. The GTX 970s in SLI range from 12-15% slower than the GTX 980s in SLI, but as we know from our years of GPU evaluation, that isn't the whole story.
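The table does not say which configuration serves as the baseline for the percentage column. A quick check, sketched here in Python, suggests the published magnitudes line up with dividing the FPS gap by the GTX 970 SLI figure. The function name and the second baseline are illustrative additions, not something taken from the testing itself.

```python
# Average frame rates (FPS) copied from the table above:
# scaling setting -> (GTX 980 SLI, GTX 970 SLI)
AVG_FPS = {
    "1.10x": (47.3, 41.0),
    "1.20x": (41.1, 35.8),
    "1.30x": (35.4, 31.2),
    "1.40x": (31.0, 27.7),
    "1.50x": (27.7, 24.6),
}


def gap_percent(fps_980, fps_970, baseline):
    """FPS gap between the two configurations as a percentage of `baseline`."""
    return (fps_980 - fps_970) / baseline * 100


for scale, (fps_980, fps_970) in AVG_FPS.items():
    vs_970 = gap_percent(fps_980, fps_970, fps_970)  # gap relative to the GTX 970 SLI result
    vs_980 = gap_percent(fps_980, fps_970, fps_980)  # gap relative to the GTX 980 SLI result
    print(f"{scale}: {vs_970:.1f}% of the 970 figure, {vs_980:.1f}% of the 980 figure")
```

Measured against the GTX 980 SLI result instead, the same gaps come out a point or two smaller, so the choice of baseline matters when quoting how much "slower" the GTX 970s are.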

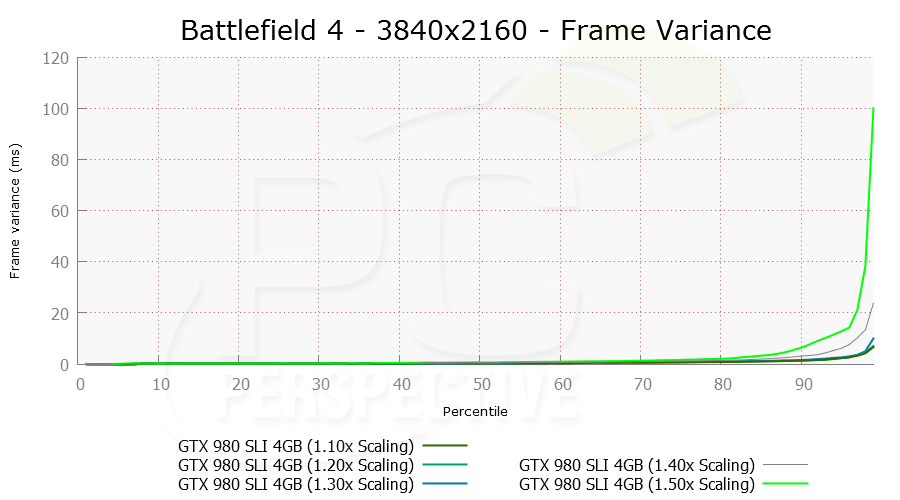

It should be painfully obvious that at 150% scaling the GTX 970s in SLI have a significant amount of frame time variance that is far more limited with the GTX 980s in SLI. Even at 140% scaling, looking at the thinner gray line, you can see differences in the behavior of the frame time lines in the two graphs (you can click to enlarge them for a closer view).

Before you start eyeballing these graphs, make sure you take note of the slightly different y-axis on the left-hand side between them - that's important. Focusing on the highest 10% of frame times in our testing, the GTX 970s clearly exhibit more issues than the GTX 980s. The flagship cards only see about 5ms of variance at the 90th percentile and cross the 20ms mark somewhere in the 96-97th percentile. The GTX 970s in SLI though reach those marks much sooner - at the 90th percentile we see as much as 18ms of frame variance and at the 96th percentile that reaches as high as ~40ms.
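For readers who want to run a similar percentile analysis on their own frame time logs, here is a rough Python sketch. This page does not spell out how frame variance is calculated, so the sketch assumes it is the absolute difference between a frame time and the average of the preceding frames; the 20-frame window, the function names, and the sample data are all hypothetical.

```python
import math


def frame_variance(frame_times_ms, window=20):
    """Absolute deviation of each frame time from the mean of the preceding
    `window` frames (an assumed definition, not confirmed by the article)."""
    variances = []
    for i in range(1, len(frame_times_ms)):
        history = frame_times_ms[max(0, i - window):i]
        baseline = sum(history) / len(history)
        variances.append(abs(frame_times_ms[i] - baseline))
    return variances


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of
    the samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Synthetic frame times in ms, invented for illustration only;
# these are NOT measurements from the testing described here.
synthetic_ms = [33.0] * 95 + [60.0, 33.0, 70.0, 33.0, 33.0]

variance_ms = frame_variance(synthetic_ms)
for pct in (90, 95, 96, 99):
    print(f"{pct}th percentile variance: {percentile(variance_ms, pct):.1f} ms")
```

Reading the result the same way as the graphs: the higher the variance at a given percentile, and the earlier it starts climbing, the more stutter you are likely to notice.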

Nobody cares about the .5… Just the basement lemmings, busy jumping on the wagon.

99.9% will not run into an issue and will be upgrading in 1-2 years anyway.

Arguing over this topic is a waste of time and makes most of you look like pretentious children looking to argue.

Go play a game! Not like you're having any issues, if you even have this card in the first place

I’m running two G1 970s on my old SB 2600k rig. I’d intended to upgrade my system to Haswell E/DDR4 this winter after bioses matured and ram prices fell, but when one of my 570s in SLI died, I replaced it with my first 970 in November, based completely on published reviews, thinking, perhaps, it could be incorporated into my new build. Singly the 970 bested my 570s together in my usual benchmarks, and it overclocked well. My wife then encouraged me to get another for Christmas, so I did, intending to bench and OC the cards, working out the bugs, then install them in my new system later this year. While I have no particular gripe with their performance in my SB machine with my older games, I have a huge gripe with Nvidia for their screw-up.

I’d planned to build my new rig to last at least 3 years, and put the money in these two GPUs with that in mind (over $800 w/ tax for a pair G1s at Microcenter). Well, now that investment seems wasted. Had I kept just one 970 in this obsolescent machine to eke out another 6 months till Broadwell Ks arrived, that would have been an expensive, but tolerable investment, and would have kept this SB system current enough to give away. Now, however, having invested so much of my allotted cash into the video component, I’ll have to delay my new rig to save more money or build it with GPUs lacking the longevity of the rest of its components. This is very unsatisfying. Instead of a near-peak rig that will take me nearly to the end of the decade, I’ll probably install gimped GPUs that will need to be replaced during its lifetime and send my old SB machine to the graveyard. . .

I don’t blame Microcenter, Gigabyte, or the reviewers, I assign the blame squarely with Nvidia for putting profit before performance–promising the latter while reaping the former–and myself for thinking that this performance/price ratio was a little too good to be true.

Nvidia’s success in putting so much performance into such quiet and efficient cards with much less memory bandwidth etc is well known.

One question comes to me every time this is mentioned at the latest Maxwell review.. What could Nvidia achieve if they took the shackles off, upped the memory to 6 or 8GB and increased memory interface from 265 to 384 or 512bit, using the hardware/firmware advances that made the 970/980 so fast/efficient?

I suspect 2015 will be VERY interesting.. I was ready to buy a 980 until I pondered this and the probable 4k single card performance.

Bring on the GTX990 !

Typo of course, 256 not 265.

Performance being the same as it was from the beginning is irrelevant. Performance not suffering except in extremely rare circumstances is also irrelevant. Lying is lying. Nvidia lied. Even worse, they knew the specs were a lie and chose to say or do nothing about it until they were forced to admit it. So, again, lying is lying. And it is wrong. If you excuse or try to diminish that fact then don’t be surprised and don’t complain in the future when you are being lied to more and more frequently.

I changed my pair of GTX 970 used for SLI.

The Reason ? They STUTTER LIKE HELL. There is NO WAY to play anything that makes use ( or try to ) of the slower 512MB’s segment.

Single GPU uses above 3.5GB’s of VRAM ( In a different way, but the card uses ).

SLI CAN’T go further 3.5GB’s mark, only using DSR or resources alike it.

So, this review is CRAP, you just say that the GTX 970 SLI performs worse than GTX 980 SLI, but you DON’T Mention the stutter. Why ? You can’t lose your nVidia contracts ?

I Doubt you tested during hours like you said.

I Single run using Shadow Of Mordor in Ultra Settings will BREAK the GTX 970 SLI.

So the real information is: GTX 970 SLI IS BROKEN ABOVE 3.5GB’s VRAM usage.

I’ve upgraded my pair to two GTX 980, and guess what !?!?!? NO MORE STUTTERS in SLI AT ALL.

Magic ?

I was expecting a HONESTLY review … Not a paid one trying to “hide” the real deal with these BROKEN cards in SLI. SAD.

This user did a cool tests ( Usefull ones ) reporting the same experience I HAD:

http://www.reddit.com/r/pcmasterrace/comments/2tuqd4/i_benchmarked_gtx_970s_in_sli_at_1440p_and_above/

No more craps from nVidia and their “paid” reviewers.

Sorry for my English, not my native language.

https://forums.geforce.com/default/topic/820753/geforce-900-series/gtx-970-sli-/1/

@ Ryan Shrout – another excellent article. Thank you for the great breakdown of this controversial performance issue. IMHO, the arrival of the GTX980Ti will help eliminate my particular decision with regards to which video card to buy. My bet is to buy the GTX980s once they drop in price after the GTX980Ti is released. I can already see all those like-new used GTX980s on sale in the Amazon marketplace. For me, it’s a win-win situation and I don’t have to pay the full “drive off the lot” price. I sit here patiently waiting for that day 😀

Wow The 970 and 980 are very close in SLI! I would say forget about SLI for the 980! Regardless of Nvidia being scumbags on the V-Ram, The 970 is still a very good performing GPU compared to its bigger brother the 980. And the price still makes it the High End GPU of choice. I think I might SLI mine now.

Eh yo yo! Yeah Yo! Like yo and eh I uh ahh izza you know what I am sayin yo? yeha! So like yo I am like a badazz gamer yo! Yeah! I got everything! I already got a classified 980 Ti yo because I like party with like the owner of Nvidia yo! Yeah! And yo I gots like 20 for free yo! Yeah!

So in other words a single 970 is fine, But it’s a waste of money to SLI them. My last (650 Ti) Nvidia card had no SLI option, So it kinda pisses me off that I pay out the wazoo for a 970, and still no sensible SLI option. I should have stayed with my R9 270 until the price of the R9 390’s went down!